Incremental Training

Recently I was reading this awesome PhD thesis by Abby Levenberg on Stream based Machine Translation. In this kind of scenario an MT system is fed a stream of data from time to time instead of all data at once. Batch learning fails in this scene because we cannot train the data everytime a stream is fed to a system, its a painfully long process. Thus, it marks the dawn of incremental learning, the 2.0 version of batch learning.

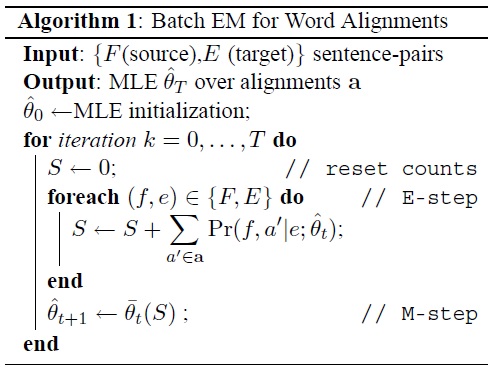

The process which takes the most of the time in training MT models can be said to be aligning the words in the sentence. This is a generative process which uses EM algorithm to align these words. Well it is really difficult to explain EM algorithm with a short description. As the name suggests the algorithm consists of two basic steps of Expectation and Maximization. First, the algorithm assumes all alignments equally likely, giving us the alignment probabilities. Using this probability model we collect the counts(sufficient statistics) for each pair of sentence (Expectation step). Using these fractional counts we estimate the alignments probabilities by normalizing them (Maximization step).

A pseudo code of EM taken from Levenberg’s paper

A modern version of EM is stepWise EM which uses stochastic approximation at the expectation step to collect the counts. Further, instead of clearing the counts at each iteration it keeps a track of the old counts and linearly interpolate them with the current counts to estimate the new alignment probabilities.

A pseudo code of both these algorithms taken from Levenberg’s thesis

PS :

1. The thesis also shows that incremental algorithm performs almost the same as batch.

2. Online learning, incremental learning are quite hot topics in NLP nowadays.